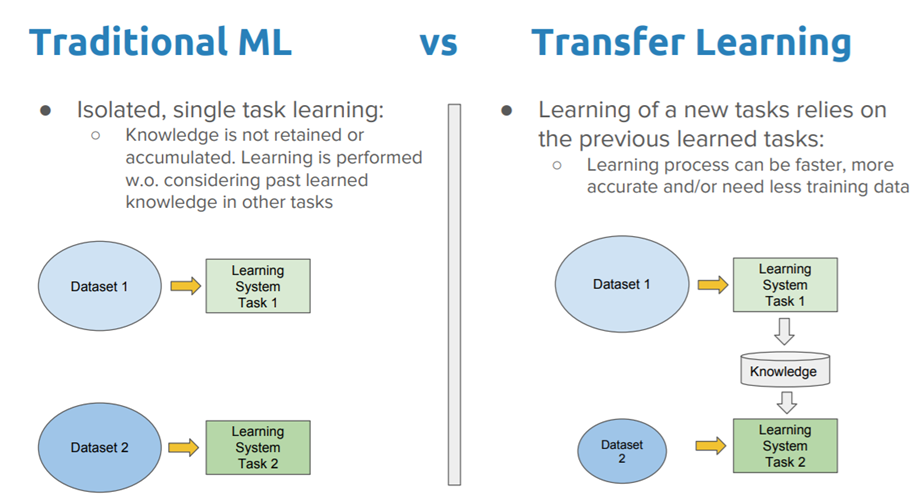

Let’s start with an intuitive picture of transfer learning by asking two questions:

What happens when we try to learn things?

Are we starting from ground zero?

Introduction

The Deep Learning book by Ian Goodfellow et al. defines transfer learning as:

“…the situation where what has been learned in one setting is exploited to improve generalization in another setting.”

In other words, the goal of transfer learning is to reuse the knowledge learned on a prior (source) task to improve learning on a target task.

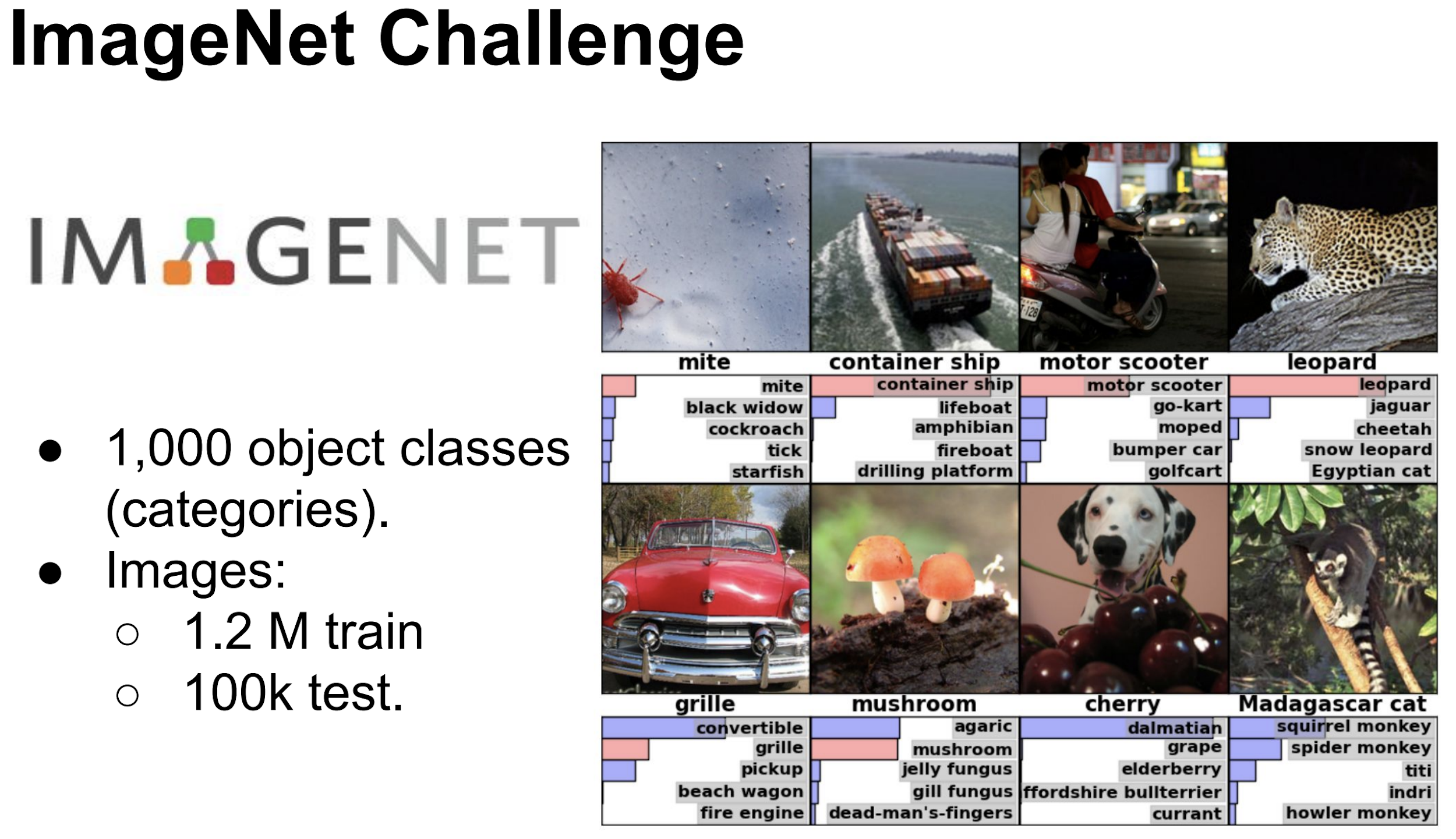

The example we will explore today revolves around ImageNet and MobileNet.

But first, let’s look at an application of transfer learning: Google’s Teachable Machine.

Background

What is ImageNet?

Why is it so popular?

What is MobileNet?

Here’s a review of convolutional neural networks.

And here’s a good site to mess around with convolutional neural networks and visualize the layers.

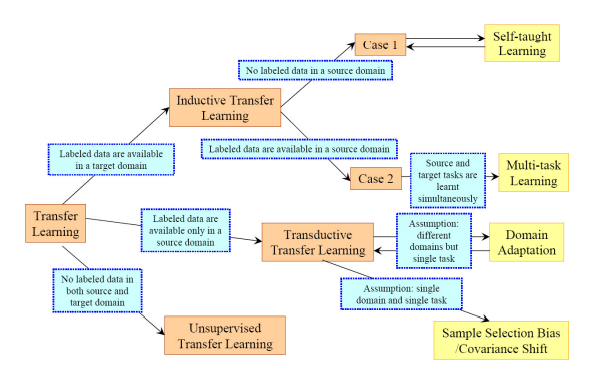

Transfer Learning Methods

There are three main categories of transfer learning: inductive, transductive, and unsupervised.

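Inductive Transfer Learning: This is what we will be doing today. The target task differs from the source task, and we have labeled data for the target task. In other words, we know what the model was originally trained on, and we know what we want it to recognize.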
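In practice, inductive transfer with a pretrained network often means freezing the base layers and training only a new output layer on the labeled target data (commonly called feature extraction). The NumPy sketch below shows only those mechanics, under loud assumptions: `W_base` is a random stand-in for weights learned on a source task (in this tutorial, MobileNet’s ImageNet-trained layers), the target dataset is synthetic, and the new head is a plain logistic regression trained by gradient descent.

```python
import numpy as np

rng = np.random.default_rng(0)

# ASSUMPTION: W_base stands in for weights learned on a source task
# (e.g. MobileNet's ImageNet layers). It is random here, purely to show
# the mechanics: the base is frozen and never updated below.
W_base = rng.normal(size=(4, 16))


def extract_features(X):
    """Frozen feature extractor: reused as-is, with no gradient updates."""
    return np.tanh(X @ W_base)


# Small synthetic labeled target dataset (plays the "hotdog / not hotdog" role).
X = rng.normal(size=(200, 4))
y = (X[:, 0] + X[:, 1] > 0).astype(float)

# New trainable head: logistic regression on top of the frozen features.
F = extract_features(X)
w = np.zeros(F.shape[1])
b = 0.0


def loss_and_grads(w, b):
    """Binary cross-entropy of the head and its gradients w.r.t. w and b."""
    p = 1.0 / (1.0 + np.exp(-(F @ w + b)))
    eps = 1e-12  # avoid log(0)
    loss = -np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps))
    grad_w = F.T @ (p - y) / len(y)
    grad_b = np.mean(p - y)
    return loss, grad_w, grad_b


W_base_before = W_base.copy()
initial_loss, _, _ = loss_and_grads(w, b)
for _ in range(1000):
    _, gw, gb = loss_and_grads(w, b)
    w -= 0.1 * gw  # only the head's parameters change
    b -= 0.1 * gb
final_loss, _, _ = loss_and_grads(w, b)

accuracy = np.mean(((F @ w + b) > 0) == (y == 1))
print(f"loss {initial_loss:.3f} -> {final_loss:.3f}, train accuracy {accuracy:.2f}")
```

With a real pretrained network the same idea applies: the base’s weights stay fixed, so only the small head has to be learned from the (usually much smaller) target dataset.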

The Path to Hotdog Not Hotdog

Let’s start by gathering pictures from ImageNet. Open a new Colab notebook and run:

```
!pip install imagenetscraper
```

Then download the photos for each class into its own output directory:

```
# Usage: imagenetscraper [OPTIONS] SYNSET_ID [OUTPUT_DIR]
#
# Options:
#   -c, --concurrency INTEGER  Number of concurrent downloads (default: 8).
#   -s, --size WIDTH,HEIGHT    If specified, images will be rescaled to the
#                              given size.
#   -q, --quiet                Suppress progress output.
#   -h, --help                 Show this message and exit.
#   --version                  Show the version and exit.

# Download each ImageNet synset into its own directory (one folder per class).
!imagenetscraper n07697537 hotdog
!imagenetscraper n00021265 nothotdog
```

Extensions

Let’s play with Flask!
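As one possible extension, the trained classifier could be served behind a small Flask endpoint. The sketch below is hypothetical: `predict_hotdog` is a placeholder that always returns 0.0 instead of running the fine-tuned MobileNet, and the `/predict` route and raw-bytes upload format are illustrative choices rather than anything from the original tutorial.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)


def predict_hotdog(image_bytes: bytes) -> float:
    """HYPOTHETICAL placeholder for the fine-tuned MobileNet classifier.

    A real version would decode the image, resize it to the model's input
    size, and return the model's "hotdog" probability. This stub ignores the
    input and returns 0.0 so the endpoint can be exercised without a model.
    """
    return 0.0


@app.route("/predict", methods=["POST"])
def predict():
    # Read the raw request body (e.g. sent with Content-Type: image/jpeg).
    image_bytes = request.get_data()
    if not image_bytes:
        return jsonify(error="request body is empty; send image bytes"), 400
    score = predict_hotdog(image_bytes)
    label = "hotdog" if score >= 0.5 else "not hotdog"
    return jsonify(label=label, score=score)
```

Saved as `app.py`, it can be started with `flask --app app run` (Flask 2.2 or later) and queried with, for example, `curl --data-binary @photo.jpg -H "Content-Type: image/jpeg" http://127.0.0.1:5000/predict`.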